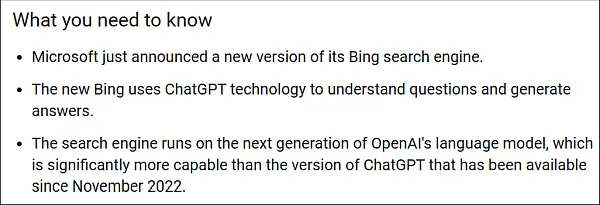

We all know (unless you have had your head under a pillow) that ChatGPT is an artificial intelligence system that can, among its other capabilities, write a love poem, an academic paper, computer code or even your homework. It can also answer your questions – just ask Bing!

ChatGPT is based on a Large Language Model (LLM) – that is how it answers your questions, and how it uses previous inputs and answers to create future answers and outputs. See the trouble this has caused for organisations that use ChatGPT to refine their code:

It took almost no time for the mischievous or malicious to work out that they could influence the output from ChatGPT. Here is a paper looking at this issue of maliciously influencing LLM outputs – including those from ChatGPT:

A complicated title, but it could mean that your future answers from search engines will not be the truth but the fake information others want you to see!

Clive Catton MSc (Cyber Security) – by-line and other articles

Further Reading

More Big Tech LLM/AI issues:

TechScape: Will Meta’s massive leak democratise AI – and at what cost? | Meta | The Guardian